Open Lab 2024

Friday, 6 December 2024, 17:00-19:15: the yearly event where students can learn about our ongoing projects.

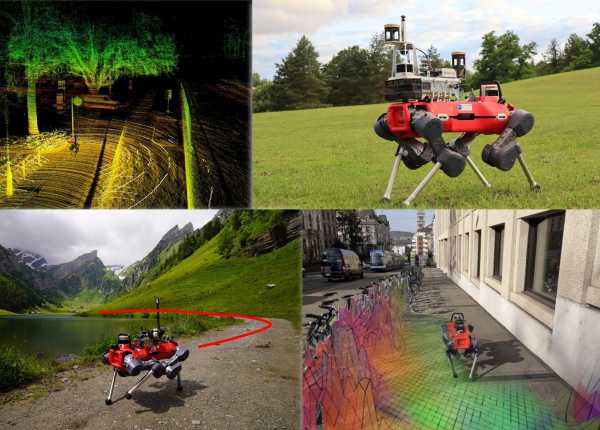

Once a year we open our lab doors and invite students and the public to visit our ongoing research projects. Professor Roland Siegwart and Professor Marco Hutter will be on site. Members of both groups will be happy to answer your questions and demonstrate their projects.

Schedule:

16:30 - 19:30 Check-in at LEE, main entrance, Leonhardstrasse 21. Pick up your wristband, which gives you access to the H and J floors.

Group: A

17:00 - 18:00 Visit LEE H and J floors

Group: B

18:15 - 19:15 Visit LEE H and J floors

After 19:15 the floors are reserved for the lab family and invited guests.

Space on each floor is limited for safety reasons, so everyone needs to register: students for 17:00-19:15, guests for 19:15 onwards.

Registration is closed!

If you registered but will not be able to come, please deregister so that another student can take your place. Send an email to: mariat@ethz.ch

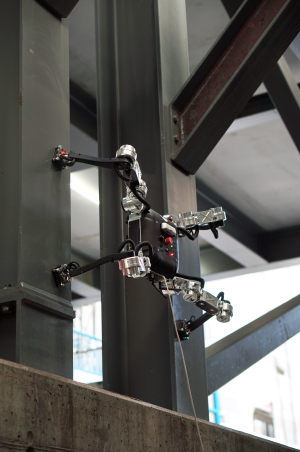

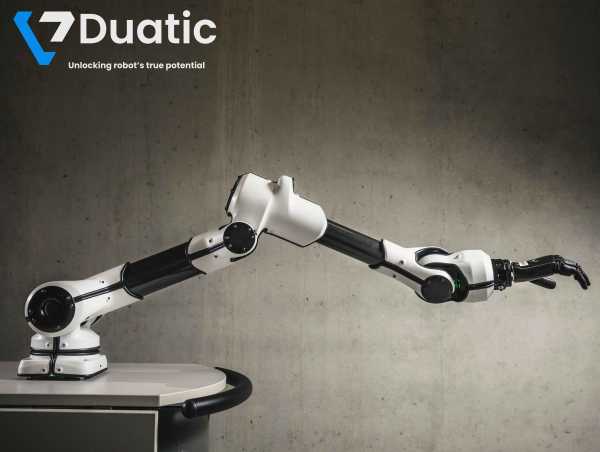

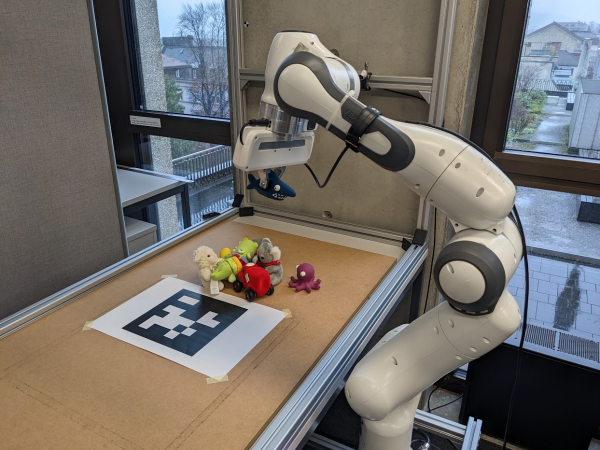

Meet the researchers of the ongoing projects